Remote Labor Index: Measuring AI Automation of Remote Work

Introduction

The potential for AIs to automate human labor is a topic of significant interest and concern. While AIs have made rapid progress on research-oriented benchmarks of knowledge and reasoning, it remains unclear how these gains translate into real economic value and actual automation.

To address this gap, we introduce the Remote Labor Index (RLI), a multi-sector benchmark of real-world, economically valuable remote-work projects designed to evaluate end-to-end agent performance in practical settings. Across the frontier AI agent frameworks we evaluated, performance sits near the floor: the highest automation rate on RLI projects is 2.5%.

These results help ground discussions of AI automation in empirical evidence, setting a common basis for tracking progress and enabling stakeholders to proactively navigate AI-driven labor automation.

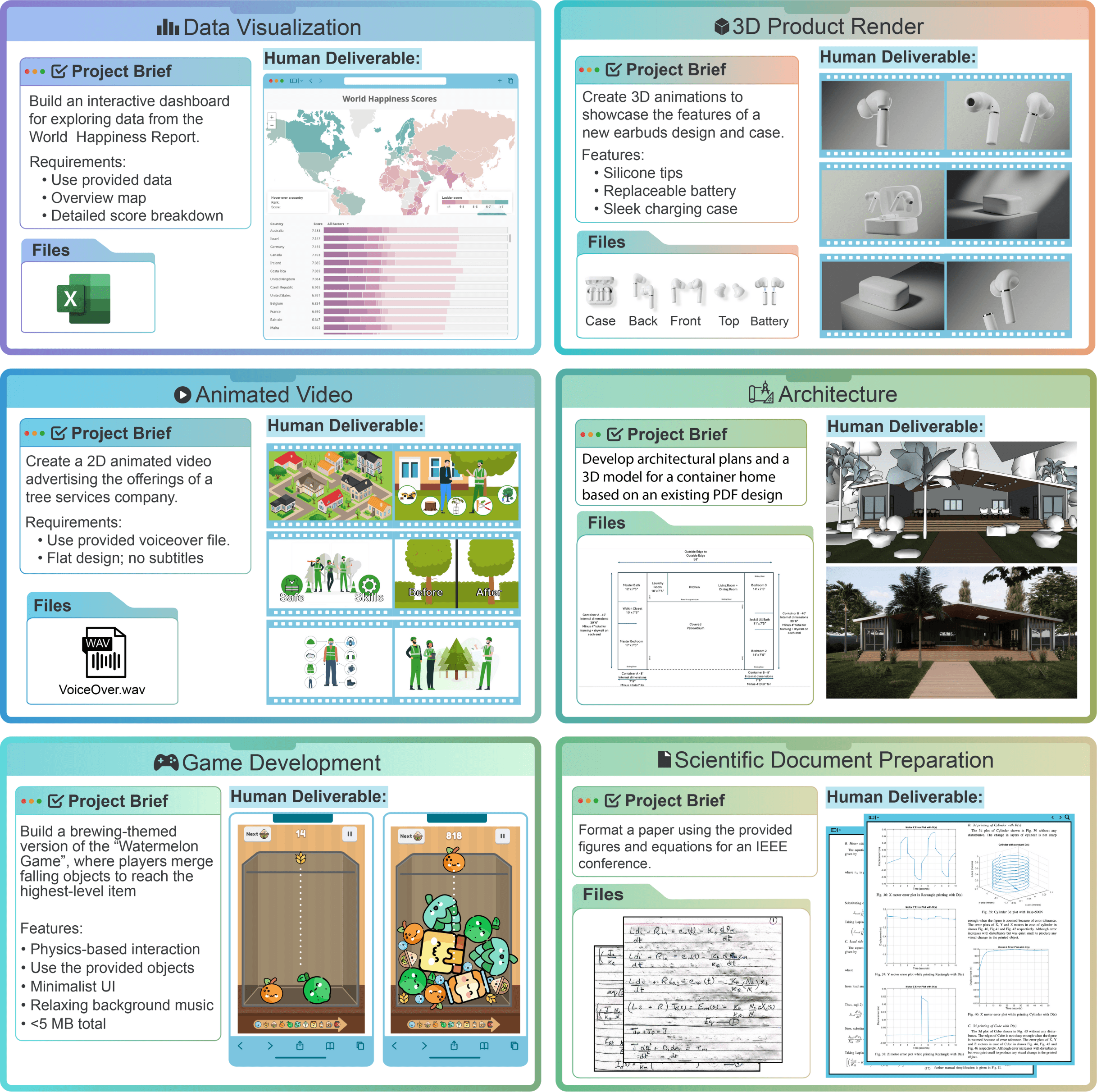

Example Projects from RLI

Remote Labor Index Overview

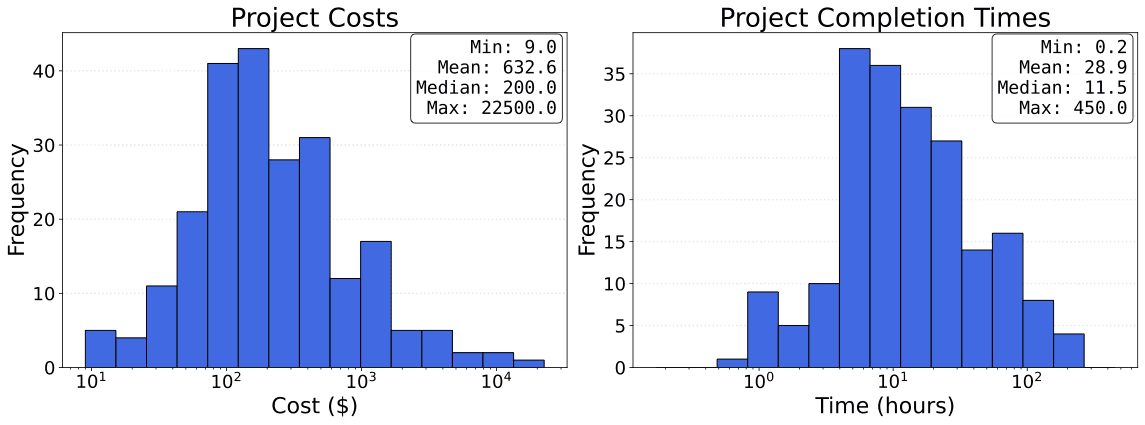

RLI represents a broad range of projects from across the remote labor economy, including game development, product design, architecture, data analysis, and video animation. These projects vary widely in difficulty, with costs reaching over $10,000 and completion times exceeding 100 hours. All project costs and completion times come directly from the human professionals who completed the work. In total, the projects in RLI represent over 6,000 hours of real work valued at over $140,000.

Evaluation Results

While AI systems have saturated many existing benchmarks, we find that state-of-the-art AI agents perform near the floor on RLI. The best-performing model achieves an automation rate of only 2.5%. This demonstrates that contemporary AI systems fail to complete the vast majority of projects at a quality level that would be accepted as commissioned work.
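As a rough illustration of how a figure like this can be read, the sketch below treats the automation rate as the percentage of projects whose AI deliverable would be accepted as commissioned work. That reading is an assumption based on the description above, not the benchmark's official scoring code, and the `ProjectResult` type, the `accepted` flag, and the toy data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ProjectResult:
    """Hypothetical record for one evaluated project (not an RLI data format)."""
    project_id: str
    accepted: bool  # assumed label: would the AI deliverable be accepted as commissioned work?


def automation_rate(results: Sequence[ProjectResult]) -> float:
    """Return the percentage of projects whose AI deliverable was judged acceptable."""
    if not results:
        raise ValueError("no results to score")
    accepted = sum(1 for r in results if r.accepted)
    return 100.0 * accepted / len(results)


# Toy data for illustration only, not RLI results:
# 40 hypothetical projects, exactly one of which is accepted.
toy_results = [ProjectResult(f"p{i}", accepted=(i == 0)) for i in range(40)]
print(f"{automation_rate(toy_results):.1f}%")  # prints "2.5%"
```

Under this reading, a rate of 2.5% means roughly one project in forty meets the bar, which is why small changes in the number of accepted projects are still visible in the metric.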

While absolute automation rates are low, our analysis shows that models are steadily improving and that progress on these complex tasks is measurable. This provides a common basis for tracking the trajectory of AI automation, enabling stakeholders to proactively navigate its impacts.

Frontier agents remain far from automating real remote-work projects.

Citation

@misc{mazeika2025remote,
  title = {Remote Labor Index: Measuring AI Automation of Remote Work},
  author = {Mantas Mazeika and Alice Gatti and Cristina Menghini and Udari Madhushani Sehwag and Shivam Singhal and Yury Orlovskiy and Steven Basart and Manasi Sharma and Denis Peskoff and Elaine Lau and Jaehyuk Lim and Lachlan Carroll and Alice Blair and Vinaya Sivakumar and Sumana Basu and Brad Kenstler and Yuntao Ma and Julian Michael and Xiaoke Li and Oliver Ingebretsen and Aditya Mehta and Jean Mottola and John Teichmann and Kevin Yu and Zaina Shaik and Adam Khoja and Richard Ren and Jason Hausenloy and Long Phan and Ye Htet and Ankit Aich and Tahseen Rabbani and Vivswan Shah and Andriy Novykov and Felix Binder and Kirill Chugunov and Luis Ramirez and Matias Geralnik and Hernán Mesura and Dean Lee and Ed-Yeremai Hernandez Cardona and Annette Diamond and Summer Yue and Alexandr Wang and Bing Liu and Ernesto Hernandez and Dan Hendrycks},
  year = {2025},
  eprint = {2510.26787},
  archivePrefix = {arXiv},
  primaryClass = {cs.LG},
  url = {https://arxiv.org/abs/2510.26787}
}